Climate Machine Learning Applications

ICCS Summer school 2023

2024-06-09

Teaching Material Recap

Over the ML sessions at the summer school we have learnt about:

- Classification - categorising items based on information

- Regression - using information to predict another value

using:

- ANNs - using input features to make predictions

- CNNs - using image-like data as an input

Considerations

Image-like data

Potential Applications

Applications in geosciences:

See the review by Kashinath et al. (2021).

- Emulation of existing parameterisations

(Espinosa et al. 2022)

- Data-driven parameterisations

(Yuval and O’Gorman 2020; Giglio, Lyubchich, and Mazloff 2018)

- Downscaling/Upsampling

(Harris et al. 2022)

- Time series forecasting

(Shao et al. 2021)

- Equation discovery

(Zanna and Bolton 2020; Ma et al. 2021)

- Complete forecasting

(Rasp et al. 2020; Pathak et al. 2022; Bi et al. 2022)

Climate Modelling

Climate models are large, complex, many-part systems.

Parameterisations

- Parameterisations are typically expensive

  - Microphysics and radiation are the top offenders

- Replace parameterisations with NNs

  - emulation of existing parameterisations

    - e.g. Espinosa et al. (2022)

  - data-driven parameterisations

    - capture missing physics?

    - train with a high-resolution model

    - access the benefits of a subgrid model without the cost(?)

Machine Learning

We typically think of Deep Learning as an end-to-end process;

a black box with an input and an output.

Who’s that Pokémon?

\[\begin{bmatrix}\vdots\\a_{23}\\a_{24}\\a_{25}\\a_{26}\\a_{27}\\\vdots\end{bmatrix}=\begin{bmatrix}\vdots\\0\\0\\1\\0\\0\\\vdots\end{bmatrix}\]

It’s Pikachu!
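The output layer above can be read as follows. Each output neuron scores one class, and the training target is a one-hot vector. The predicted class is the neuron with the largest activation. Below is a minimal plain-Python sketch. It assumes a hypothetical list of 30 class labels in which class 25 (index 24 when counting from zero) is Pikachu. `one_hot` and `decode` are illustrative names, not part of any library.

```python
def one_hot(index: int, n_classes: int) -> list[float]:
    """Training target: 1.0 at `index`, 0.0 everywhere else."""
    if not 0 <= index < n_classes:
        raise ValueError("index out of range")
    return [1.0 if i == index else 0.0 for i in range(n_classes)]


def decode(activations: list[float], labels: list[str]) -> str:
    """Return the label of the output neuron with the largest activation."""
    if len(activations) != len(labels):
        raise ValueError("need one label per output neuron")
    best = max(range(len(activations)), key=lambda i: activations[i])
    return labels[best]


# Hypothetical labels: position i (0-based) holds class number i + 1,
# so a_25 in the equation above corresponds to index 24 here.
labels = [f"class_{k}" for k in range(1, 31)]
labels[24] = "Pikachu"

target = one_hot(24, len(labels))   # what the network is trained towards
prediction = [0.01] * len(labels)   # a trained net rarely outputs an exact one-hot vector...
prediction[24] = 0.85
print(decode(prediction, labels))   # ...so we pick the largest activation
```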

Neural Net by 3Blue1Brown under fair dealing.

Pikachu © The Pokemon Company, used under fair dealing.

Machine Learning in Science


Replacing physics-based components

2 approaches:

- emulation, or

- data-driven.

Additional challenges:

- Physical compatibility

  - Physics-based models have conservation laws

  - Required for accuracy and stability

- Language interoperation

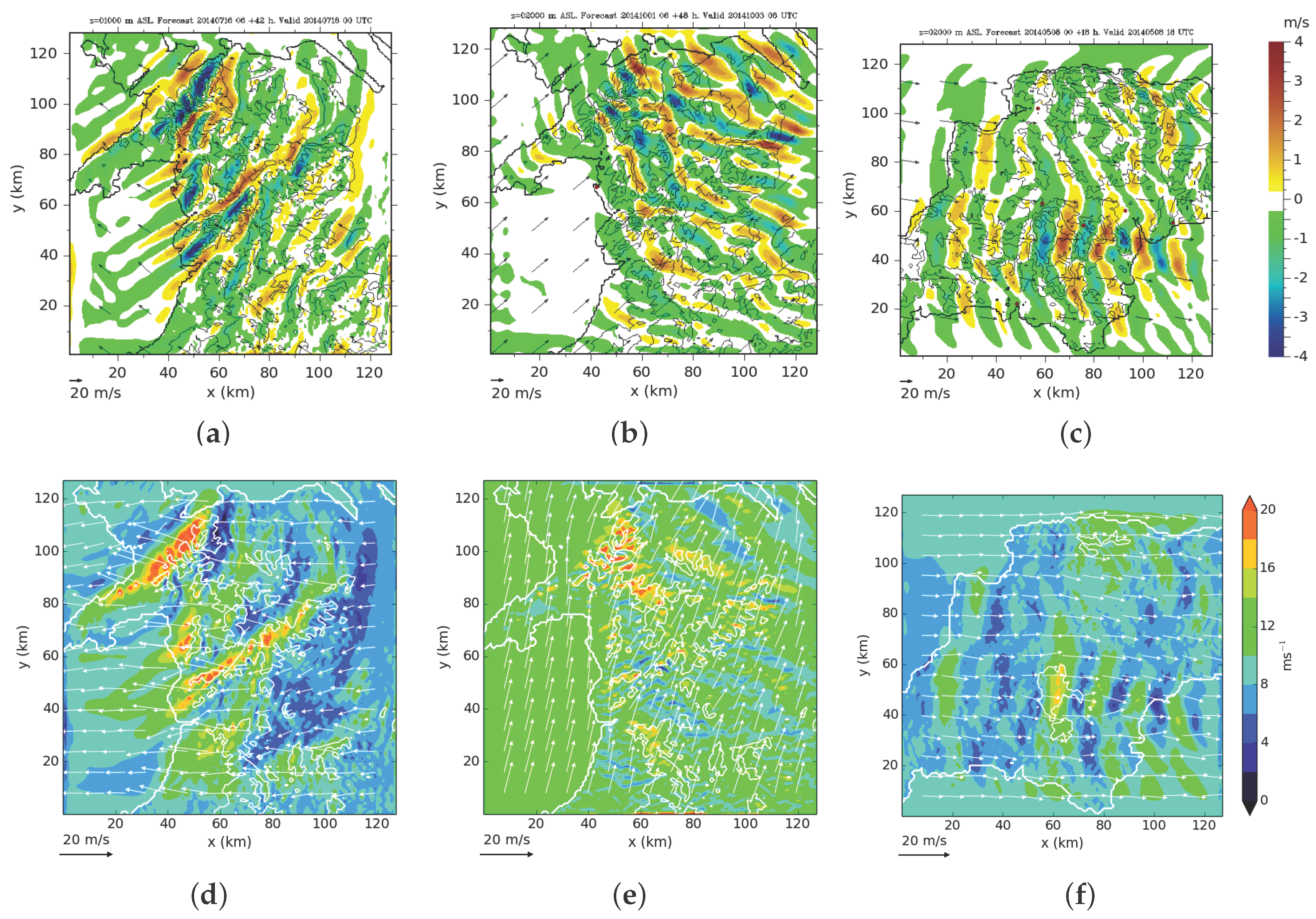

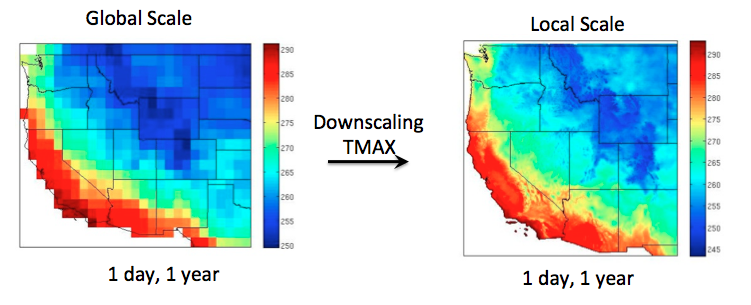
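One way to respect a conservation law is to correct the NN's prediction after it has been made. The sketch assumes a hypothetical NN that predicts a tendency on each vertical level. It also assumes that the mass-weighted column total must equal a known value, such as zero or a surface flux. `conserve_column_total` and all the numbers are illustrative and are not taken from any particular model. The uniform additive shift is just one simple choice of "fixer".

```python
def conserve_column_total(
    tendencies: list[float], layer_masses: list[float], target_total: float
) -> list[float]:
    """Shift every level by the same amount so that sum(m_k * t_k) == target_total.

    This is one simple fixer (a uniform additive correction); others, such as
    proportional scaling, are equally possible.
    """
    if len(tendencies) != len(layer_masses):
        raise ValueError("one mass per level required")
    current_total = sum(m * t for m, t in zip(layer_masses, tendencies))
    correction = (target_total - current_total) / sum(layer_masses)
    return [t + correction for t in tendencies]


# Hypothetical NN output for a 3-level column. The column-integrated tendency
# should be zero (nothing enters or leaves), but the raw prediction gives 0.075.
raw = [0.2, -0.1, 0.05]
masses = [1.0, 2.0, 1.5]
fixed = conserve_column_total(raw, masses, target_total=0.0)
print(sum(m * t for m, t in zip(masses, fixed)))
```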

Downscaling

- Can we get information for ‘free’?

- Train to predict the high-resolution ‘image’ from a coarsened version.

- Topography?

Image by Earth Lab

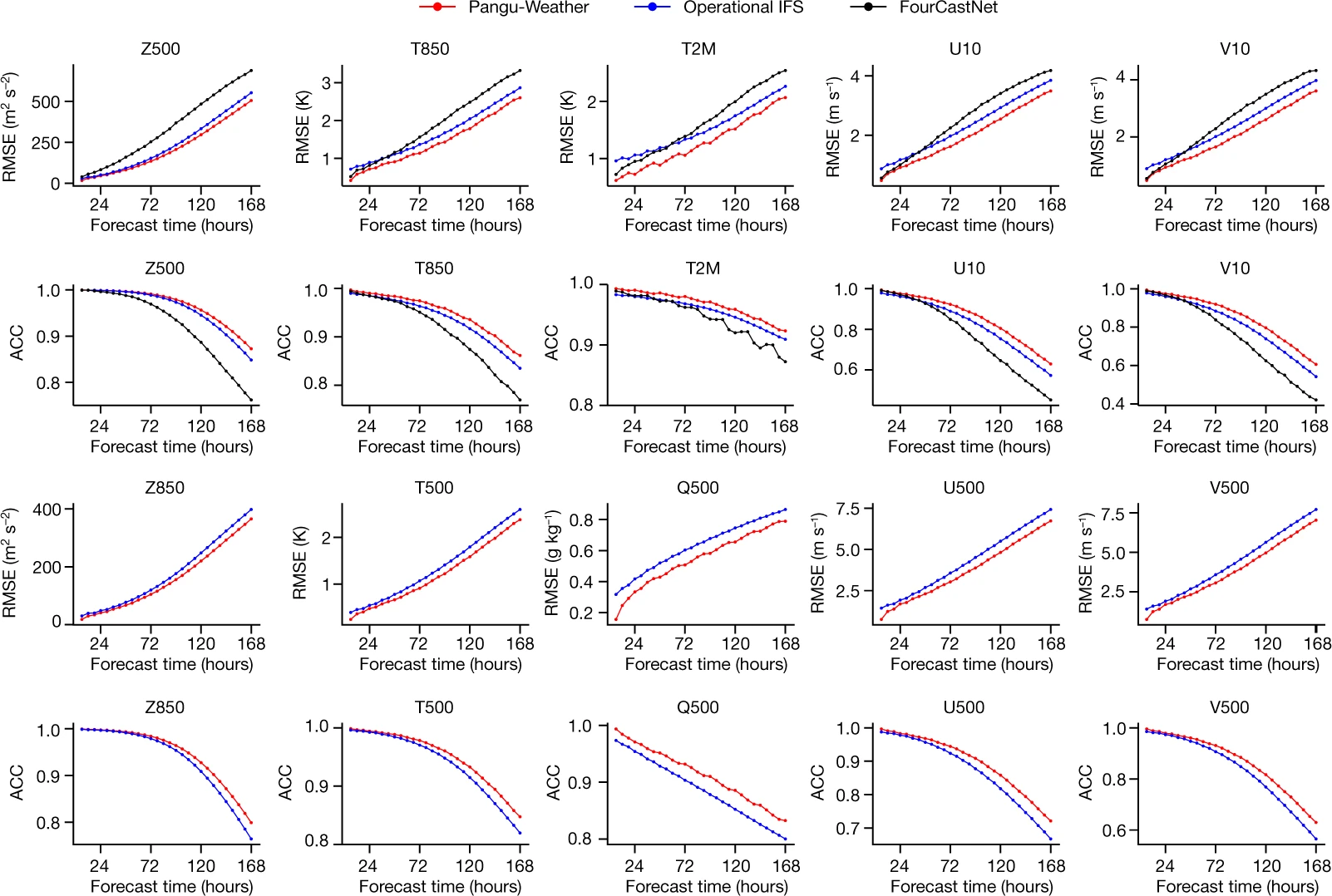
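Training pairs for downscaling can be made by coarsening high-resolution data, which is how the target comes "for free". The minimal sketch below makes two assumptions. First, a 2D field is stored as a list of rows. Second, the grid dimensions are divisible by the coarsening factor. `coarsen` is a hypothetical helper that uses simple block averaging; real workflows may use conservative regridding instead.

```python
def coarsen(field: list[list[float]], factor: int) -> list[list[float]]:
    """Block-average a 2D field by `factor` in each direction."""
    ny, nx = len(field), len(field[0])
    if ny % factor or nx % factor:
        raise ValueError("grid dimensions must be divisible by factor")
    coarse = []
    for j in range(0, ny, factor):
        row = []
        for i in range(0, nx, factor):
            block = [field[jj][ii]
                     for jj in range(j, j + factor)
                     for ii in range(i, i + factor)]
            row.append(sum(block) / len(block))
        coarse.append(row)
    return coarse


# Hypothetical 4x4 'high-resolution' field.
fine = [[1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16]]
training_pair = (coarsen(fine, 2), fine)   # (input, target)
print(training_pair[0])
```

Each (coarse, fine) pair then serves as an (input, target) example. A static high-resolution field such as topography could be supplied as an extra input.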

Forecasting

- Time-series

  - a popular use case

  - Recurrent Neural Nets

- Complete weather

  - FourCastNet, Pangu-Weather, GraphCast

Line plot image from Bi et al. (2023)

Global image from NVIDIA FourCastNet
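For time-series forecasting, each training example is usually a window of past values paired with the value to predict. Longer forecasts are made by feeding predictions back in as inputs, an autoregressive rollout of the kind many learned weather models use. The plain-Python sketch below is illustrative only. `make_windows`, `rollout`, and the linear-extrapolation stand-in for a trained network are all hypothetical.

```python
from typing import Callable


def make_windows(series: list[float], window: int, horizon: int = 1):
    """Pair each run of `window` values with the value `horizon` steps later."""
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        x = series[start:start + window]
        y = series[start + window + horizon - 1]
        pairs.append((x, y))
    return pairs


def rollout(model: Callable[[list[float]], float],
            history: list[float], steps: int, window: int) -> list[float]:
    """Forecast `steps` values by repeatedly feeding predictions back in."""
    values = list(history)
    forecast = []
    for _ in range(steps):
        nxt = model(values[-window:])
        forecast.append(nxt)
        values.append(nxt)
    return forecast


def linear_extrapolate(w: list[float]) -> float:
    """Stand-in for a trained network: continue the last trend."""
    return w[-1] + (w[-1] - w[-2])


pairs = make_windows([0.0, 1.0, 2.0, 3.0, 4.0, 5.0], window=3)
print(pairs)
print(rollout(linear_extrapolate, [0.0, 1.0, 2.0], steps=3, window=3))
```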

Challenges

Training data - considerations

How should we prepare our training data?

- Cyclic data?

  - e.g. diurnal, annual, other

  - use time as an input

  - use a [daily] average
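Two of the options above can be sketched in plain Python, assuming hourly data. The first encodes time of day as a point on a circle with sine and cosine, so that 23:00 and 00:00 end up close together rather than 23 units apart. The second collapses hourly values to daily means. `encode_cyclic` and `daily_means` are illustrative names.

```python
import math


def encode_cyclic(value: float, period: float) -> tuple[float, float]:
    """Map a cyclic quantity (e.g. hour of day, period 24) onto the unit circle."""
    angle = 2.0 * math.pi * value / period
    return math.sin(angle), math.cos(angle)


def daily_means(hourly: list[float]) -> list[float]:
    """Average consecutive blocks of 24 hourly values."""
    if len(hourly) % 24:
        raise ValueError("expected a whole number of days")
    return [sum(hourly[d * 24:(d + 1) * 24]) / 24 for d in range(len(hourly) // 24)]


# Hours 23 and 0 are neighbours on the circle, unlike their raw values.
print(math.dist(encode_cyclic(23, 24), encode_cyclic(0, 24)))
print(daily_means([float(h) for h in range(48)]))
```

The same encoding works for an annual cycle with `period=365.25` and the day of year as the input.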

Training data - implications

- A NN only knows as much as its training data.

- How do you predict a 1-in-100 event? A 1-in-1000 event?

- How do you train for a changing climate?

- And tipping points?

Image by NASA
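A quick calculation shows why rare events are a problem for training data. It assumes that "1/100" means a 1% chance per year, that years are independent, and a hypothetical 30-year training record. `prob_never_seen` is an illustrative name.

```python
def prob_never_seen(annual_prob: float, n_years: int) -> float:
    """Chance that an event with the given annual probability never occurs in n_years.

    Assumes independent years and a stationary climate, which is exactly the
    assumption a changing climate breaks.
    """
    return (1.0 - annual_prob) ** n_years


record_years = 30  # hypothetical length of the training record
for label, p in [("1-in-100", 0.01), ("1-in-1000", 0.001)]:
    print(f"{label}: P(absent from {record_years} years of data) = "
          f"{prob_never_seen(p, record_years):.2f}")
```

This gives roughly 74% and 97%, so most 30-year records would contain no example of either event.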

Structure/Physics-informed approach

There is a wide variety of ways to structure a Neural Net.

What is the most appropriate structure for our application?

What are the potential pitfalls? Don’t go in blind with an ML hammer!

Case study of Ukkonen (2022) for emulating radiative transfer:

- a Recurrent Neural Network reflects the physical propagation of radiation,

- and prevents spurious correlations.
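A toy example shows how a recurrent structure can limit spurious correlations. When the network scans a column level by level, its output at a given level can only depend on levels already visited. This is a plain-Python sketch, not Ukkonen's architecture. `scan_column`, the scalar weights `w` and `u`, and the input values are all hypothetical. A real emulator uses learned vector-valued weights and may need to scan in both directions, for upwelling and downwelling radiation.

```python
import math


def scan_column(inputs: list[float], w: float = 0.8, u: float = 0.5) -> list[float]:
    """Toy scalar RNN walking down a column one level at a time.

    The hidden state h carries information from the levels already visited,
    loosely mirroring radiation passing through the layers above.
    """
    h = 0.0
    outputs = []
    for x in inputs:
        h = math.tanh(w * x + u * h)
        outputs.append(h)
    return outputs


# Levels ordered top-of-atmosphere -> surface (hypothetical values).
column = [1.0, 2.0, 3.0, 4.0]
perturbed = [1.0, 2.0, 3.0, -4.0]  # change only the lowest level
print(scan_column(column))
print(scan_column(perturbed))      # the upper three outputs are unchanged
```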

Physical Compatibility

Many ML applications in climate science are more complex than classical ML applications.

- our ML usage is often not end-to-end

- A stable/accurate offline model will not necessarily be stable online (Furner et al. 2023).

Your NN is perfectly happy to predict ‘negative rain’.

- Even with heavy penalties in the loss function

- This is not a new problem in numerical parameterisations.

- What is the best way to enforce physical constraints in NNs?
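One option is to build the constraint into the network's final activation. A loss penalty only discourages negative rain, whereas this approach rules it out by construction. The plain-Python sketch below applies two common output maps to hypothetical raw outputs. ReLU gives exact zeros but has zero gradient for negative inputs. Softplus is smooth but never returns exactly zero, which can matter when many points have no rain.

```python
import math


def relu(x: float) -> float:
    """Clip negative values to zero (exact zeros, but zero gradient below 0)."""
    return max(0.0, x)


def softplus(x: float) -> float:
    """Smooth positive map log(1 + e^x), written to avoid overflow for large |x|."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


raw_rain = [-2.0, -0.1, 0.0, 1.5]  # hypothetical unconstrained NN outputs
print([relu(r) for r in raw_rain])
print([round(softplus(r), 3) for r in raw_rain])
```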

Redeployability

How easy is it to redeploy an ML model? Exactly what has it learned?

- Locked to a geographical location?

- Locked to numerical model?

- Locked to a specific grid!?

- How do we handle inputs from different models?

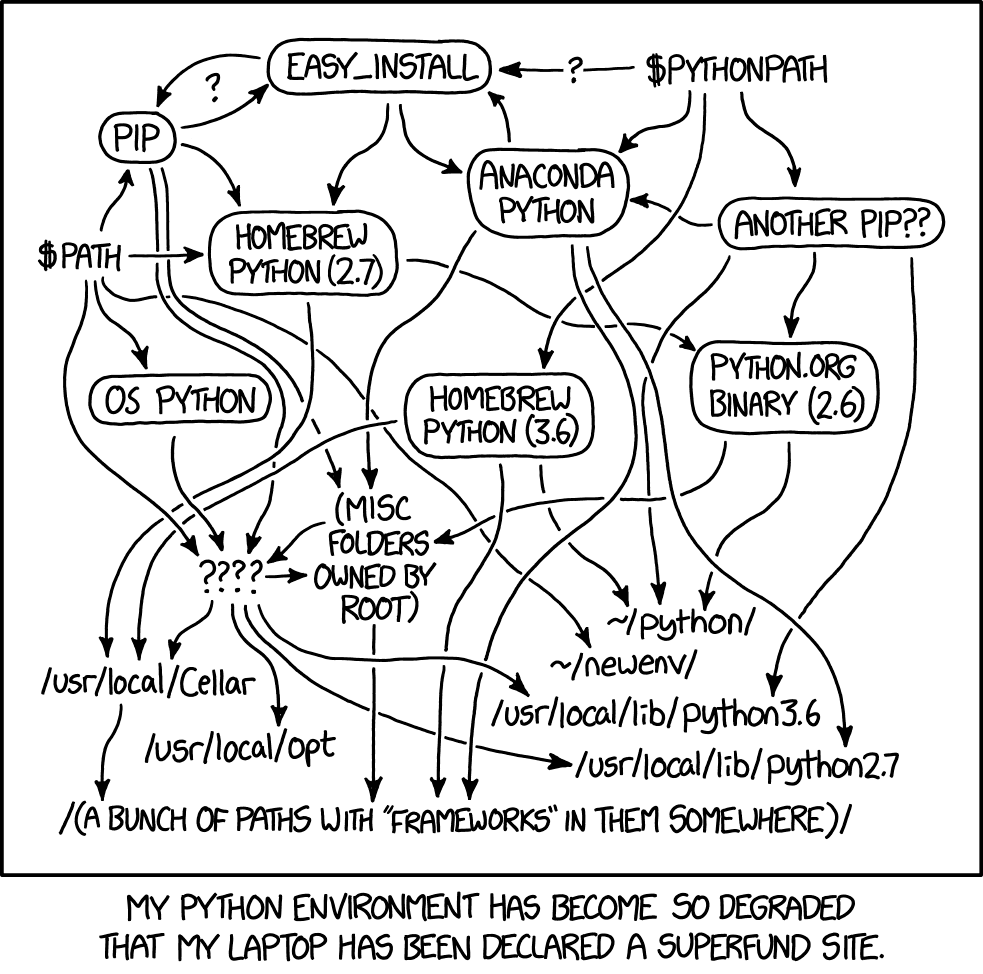

Interfacing

Replacing physics-based components of larger models (emulation or data-driven) requires care.

- Language interoperation

- Physical compatibility

Mathematical Bridge by cmglee used under CC BY-SA 3.0


Interfacing - Possible solutions

Ideally need to:

- Not generate excess additional work for user

- Not require excess knowledge of computing

- Minimal learning curve

- Not add excess dependencies

- Be easy to maintain

- Maximise performance

Interfacing - Possible solutions

- Implement a NN in Fortran

- Forpy/CFFI

- SmartSim/Pipes

- Fortran-Keras Bridge

Interfacing - Our Solution

Figure labels: Python env, Python runtime

xkcd #1987 by Randall Munroe, used under CC BY-NC 2.5

Interfacing - Our Solution

FTorch and TF-lib

- Use Fortran’s intrinsic C bindings to access the C/C++ APIs provided by ML frameworks

- Performance

  - avoids the Python runtime

  - no-copy transfer (shared memory)

- Ease of use

  - pleasant API (see next slides)

  - utilities for generating TorchScript/TF modules provided

  - examples provided

- Use frameworks’ implementations directly

  - feature support

  - future support

  - direct translation of Python models

Code Example - PyTorch

- Take the model file

- Save it as TorchScript
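The two steps above can be sketched with PyTorch's standard `torch.jit` calls. The FTorch repository provides its own helper for this; the sketch below is not that helper. `SimpleNet` is a hypothetical stand-in for "the model file", and the file name is arbitrary. The block saves the model, reloads it, and checks that the reloaded model gives the same answer.

```python
import os
import tempfile

import torch
import torch.nn as nn


class SimpleNet(nn.Module):
    """Hypothetical stand-in for 'the model file': a tiny fully connected net."""

    def __init__(self, n_in: int = 5, n_out: int = 5) -> None:
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(n_in, 16),
            nn.ReLU(),
            nn.Linear(16, n_out),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.layers(x)


def save_torchscript(model: nn.Module, path: str) -> None:
    """Compile `model` to TorchScript and write it to `path`."""
    model.eval()                        # disable training-only behaviour before export
    scripted = torch.jit.script(model)  # torch.jit.trace(model, example_input) is an alternative
    scripted.save(path)


# Round trip: save, reload, and compare outputs.
model = SimpleNet()
x = torch.randn(3, 5)
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "simplenet.pt")
    save_torchscript(model, path)
    reloaded = torch.jit.load(path)
    with torch.no_grad():
        max_abs_diff = (reloaded(x) - model(x)).abs().max().item()
print(f"max |difference| after reload: {max_abs_diff:.2e}")
```

Scripting keeps Python control flow in the saved model. Tracing records only the operations run for one example input, which is sufficient for simple feed-forward nets.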

Code Example - PyTorch

Necessary imports:

use, intrinsic :: iso_c_binding, only: c_int, c_int64_t, c_float, c_char, &
                                       c_null_char, c_ptr, c_loc
use ftorch

Loading a PyTorch model:

Code Example - PyTorch

Tensor creation from Fortran arrays:

! Fortran variables (assumed allocated and filled elsewhere;
! the output is assumed to have the same shape as the input)
real(c_float), dimension(:,:), allocatable, target :: SST, model_output

! C/Torch variables
integer(c_int), parameter :: dims_T = 2
integer(c_int64_t) :: shape_T(dims_T)
integer(c_int), parameter :: n_inputs = 1
type(torch_tensor), dimension(n_inputs), target :: model_inputs
type(torch_tensor) :: model_output_T

shape_T = shape(SST)
! Wrap the Fortran memory as Torch tensors; kFloat32 matches real(c_float)
model_inputs(1) = torch_tensor_from_blob(c_loc(SST), dims_T, shape_T, &
                                         torch_kFloat32, torch_kCPU)
model_output_T = torch_tensor_from_blob(c_loc(model_output), dims_T, shape_T, &
                                        torch_kFloat32, torch_kCPU)

Code Example - PyTorch

Running the model

Cleaning up:

Further information

References

Slides

These slides can be viewed at:

https://cambridge-iccs.github.io/slides/ml-training/applications.html

The HTML and source can be found on GitHub.

Contact

For more information we can be reached at:

Jack Atkinson

You can also contact the ICCS, make a resource allocation request, or visit us at the Summer School RSE Helpdesk.