Coupling Machine Learning to Numerical (Climate) Models: Tools, Challenges, and Lessons Learned

2024-06-03

Precursors

Slides and Materials

To access links or follow on your own device these slides can be found at:

jackatkinson.net/slides

Licensing

Except where otherwise noted, these presentation materials are licensed under the Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) License.

Vectors and icons by SVG Repo under CC0(1.0) or FontAwesome under SIL OFL 1.1

Weather, Climate, and Machine Learning

The first weather model

In ~1916 Lewis Fry Richardson attempted to compute a 1-day forecast by hand using partial differential equations.

He went on to publish Weather Prediction by Numerical Process (Richardson 1922)

Images from the Met Office in the UK, Fair Use.

The first weather model

In Weather Prediction by Numerical Process (Richardson 1922) LFR envisaged a “Forecast Factory”.

He lived to see this realised, albeit in a different setting, on ENIAC by Charney, the von Neumanns et al. in 1950.

ENIAC by US serviceperson, Public Domain.

The Forecast Factory by Stephen Conlin (1986), Fair Use.

Models today

Climate models are large, complex, many-part systems.

Parameterisation

Subgrid processes are the largest source of uncertainty

Microphysics by Sisi Chen Public Domain

Staggered grid by NOAA under Public Domain

Globe grid with box by Caltech under Fair use

Machine Learning in Science

We typically think of Deep Learning as an end-to-end process; a black box with an input and an output.

Who’s that Pokémon?

\[\begin{bmatrix}\vdots\\a_{23}\\a_{24}\\a_{25}\\a_{26}\\a_{27}\\\vdots\\\end{bmatrix}=\begin{bmatrix}\vdots\\0\\0\\1\\0\\0\\\vdots\\\end{bmatrix}\]

It’s Pikachu!

Neural Net by 3Blue1Brown under fair dealing.

Pikachu © The Pokemon Company, used under fair dealing.

Challenges

- Reproducibility
  - Ensure net functions the same in-situ
- Re-usability
  - Make ML parameterisations available to many models
  - Facilitate easy re-training/adaptation
- Language Interoperation

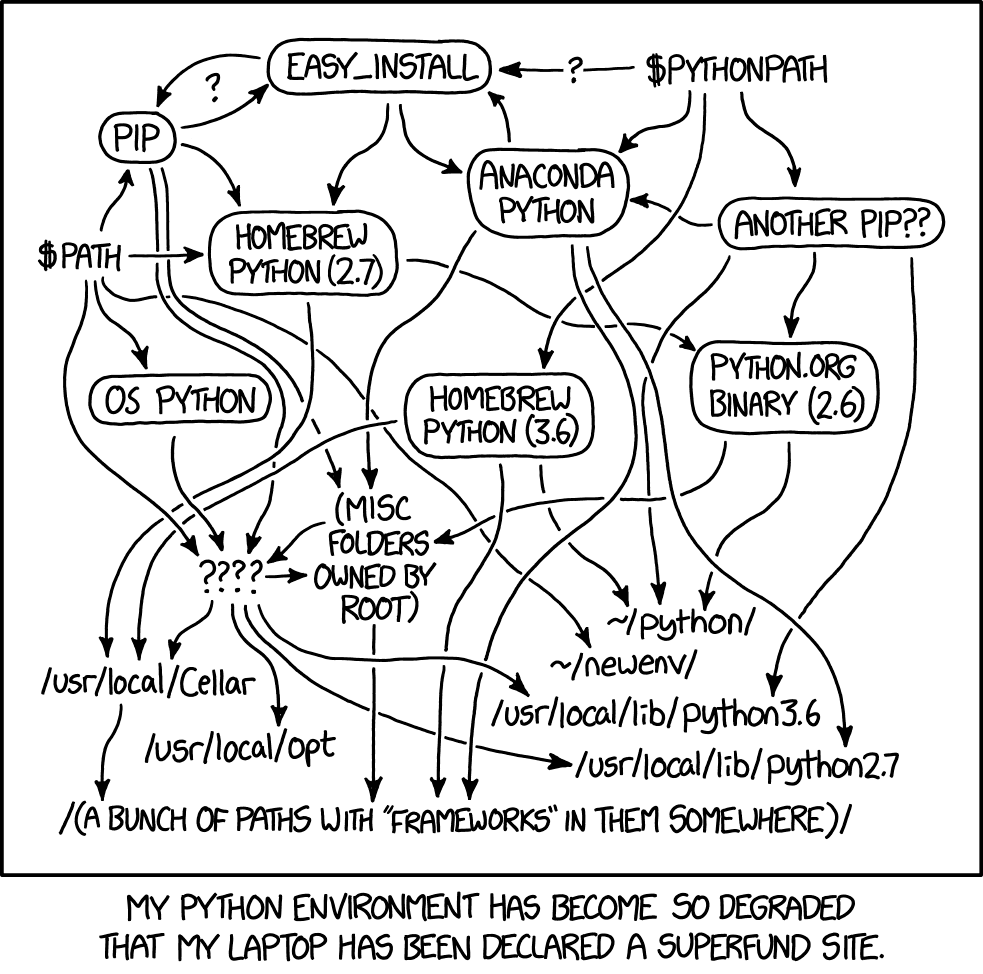

Language interoperation

Many large scientific models are written in Fortran (or C, or C++).

Much machine learning is conducted in Python.

Mathematical Bridge by cmglee used under CC BY-SA 3.0

PyTorch, the PyTorch logo and any related marks are trademarks of The Linux Foundation.

TensorFlow, the TensorFlow logo and any related marks are trademarks of Google Inc.

Possible solutions

- Implement a NN in Fortran
  - Additional work, reproducibility issues, hard for complex architectures
- Forpy
  - Easy to add, harder to use with ML, GPL, barely-maintained
- SmartSim
  - Python ‘control centre’ around Redis: generic/versatile, learning curve, data copying
- Fortran-Keras Bridge
  - Keras only, abandonware(?)

Efficiency

We consider 2 types:

Computational

Developer

At the academic end of research both have an equal effect on ‘time-to-science’.

Especially when extensive research software support is unavailable.

FTorch

Approach

- PyTorch (and TensorFlow) have C++ backends and provide APIs.
- Binding Fortran to C is straightforward from Fortran 2003 onwards using iso_c_binding.

We will:

- Archive PyTorch model as TorchScript
  - to be run by libtorch C++
- Provide Fortran API
  - wrapping the libtorch C++ API
  - abstracting complex details from users

Approach

Python env

Python runtime

xkcd #1987 by Randall Munroe, used under CC BY-NC 2.5

Highlights - Developer

- Easy to clone and install
  - CMake, supported on linux/unix and Windows™
- Easy to link
  - Build using CMake,
  - or link via Make like NetCDF (instructions included)

FCFLAGS += -I<path/to/install>/include/ftorch

LDFLAGS += -L<path/to/install>/lib64 -lftorch

Find it on :

Highlights - Developer

- User tools
  - pt2ts.py aids users in saving PyTorch models to TorchScript
- Examples suite
  - Take users through full process from trained net to Fortran inference
- Full API documentation online at cambridge-iccs.github.io/FTorch
- FOSS
  - licensed under MIT
  - contributions from users via GitHub welcome

Highlights - Computation

- Use framework’s implementations directly
  - feature and future support, and reproducible
- Make use of the Torch backends for GPU offload
  - CUDA, MPS enabled
  - XPU work in progress
- No-copy access in memory (on CPU).
  - Indexing issues and associated reshape avoided with Torch strided accessor.
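The indexing point can be illustrated with a small, pure-Python sketch. Fortran stores arrays column-major while Torch tensors default to row-major, so one way to avoid a reshape or copy is to describe the same flat memory with different strides. The helper names below are hypothetical and this is not FTorch's implementation, only an illustration of the idea of a strided view.

```python
from itertools import product


def column_major_strides(shape):
    """Element strides for a Fortran-ordered (column-major) array."""
    strides, step = [], 1
    for extent in shape:
        strides.append(step)
        step *= extent
    return tuple(strides)


def row_major_strides(shape):
    """Element strides for a C-ordered (row-major) array."""
    strides, step = [], 1
    for extent in reversed(shape):
        strides.append(step)
        step *= extent
    return tuple(reversed(strides))


def element(buffer, strides, index):
    """Read one element of a strided view without copying the buffer."""
    return buffer[sum(i * s for i, s in zip(index, strides))]


shape = (2, 3)
# Flat memory as Fortran would lay out a(2,3), filled so that
# a(i,j) = 10*i + j (indices are 0-based in this sketch).
fortran_buffer = [10 * i + j for j in range(shape[1]) for i in range(shape[0])]

f_strides = column_major_strides(shape)
c_strides = row_major_strides(shape)

# Viewing the buffer with column-major strides recovers a(i,j) directly,
# with no reshape and no copy.
view = {ij: element(fortran_buffer, f_strides, ij)
        for ij in product(range(shape[0]), range(shape[1]))}
```

Reading the same buffer with `c_strides` instead would return the wrong elements, which is the indexing problem that a strided view sidesteps.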

Some code

Tensors

use, intrinsic :: iso_fortran_env, only : sp => real32

! Use the FTorch Library

use :: ftorch

implicit none

! Fortran variables

real(sp), dimension(1,3,224,224), target :: in_data

real(sp), dimension(1, 1000), target :: out_data

integer, parameter :: n_inputs = 1

integer :: in_layout(4) = [1,2,3,4]

integer :: out_layout(2) = [1,2]

! Torch Tensors

type(torch_tensor), dimension(1) :: in_tensors

type(torch_tensor) :: out_tensor

! Populate Fortran data

call random_number(in_data)

! Cast Fortran data to Tensors

! Create input/output tensors from the above arrays

in_tensors(1) = torch_tensor_from_array(in_data, in_layout, torch_kCPU)

out_tensor = torch_tensor_from_array(out_data, out_layout, torch_kCPU)

Model - Saving from Python

import torch

import torchvision

# Load pre-trained model and put in eval mode

model = torchvision.models.resnet18(weights="IMAGENET1K_V1")

model.eval()

# Create dummy input

dummy_input = torch.ones(1, 3, 224, 224)

# Trace model and save

traced_model = torch.jit.trace(model, dummy_input)

frozen_model = torch.jit.freeze(traced_model)

frozen_model.save("/path/to/saved_model.pt")

TorchScript

- Statically typed subset of Python

- Read by the Torch C++ interface (or any Torch API)

- Produces intermediate representation/graph of NN

- Including weights and biases

- Trace for simple models, script also available

Model - Loading and running

Loading

! Define a Torch module

type(torch_module) :: model

! Load in from Torchscript

model = torch_module_load('/path/to/saved_model.pt')

Cleaning up

! Cleanup

call torch_module_delete(model)

call torch_tensor_delete(in_tensors(1))

call torch_tensor_delete(out_tensor)

! Use Fortran array `out_data` elsewhere in code

GPU Acceleration

Cast Tensors to GPU in Fortran:

! Load in from Torchscript

model = torch_module_load('/path/to/saved/gpu/model.pt', torch_kCUDA, device_index=0)

! Cast Fortran data to Tensors

in_tensors(1) = torch_tensor_from_array(in_data, in_layout, torch_kCUDA, device_index=0)

out_tensor = torch_tensor_from_array(out_data, out_layout, torch_kCPU)

Effective HPC simulation requires MPI_Gather() for efficient data transfer.
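One way to read the point about gathering is that data from many MPI ranks is collected into a single batch, so the network runs once on the device rather than once per rank. The sketch below imitates that pattern with plain Python lists standing in for ranks. It does not call MPI, and `gather`, `scatter`, and `toy_net` are hypothetical stand-ins rather than part of FTorch or any MPI binding.

```python
def gather(per_rank_data):
    """Concatenate per-rank lists into one batch, remembering each size."""
    counts = [len(chunk) for chunk in per_rank_data]
    batch = [item for chunk in per_rank_data for item in chunk]
    return batch, counts


def scatter(batch, counts):
    """Split a batch back into per-rank lists using the recorded sizes."""
    chunks, start = [], 0
    for n in counts:
        chunks.append(batch[start:start + n])
        start += n
    return chunks


net_calls = 0


def toy_net(batch):
    """Stand-in for one inference call on the GPU; counts its invocations."""
    global net_calls
    net_calls += 1
    return [2.0 * x for x in batch]


# Three simulated ranks, each holding a different number of columns.
ranks = [[1.0, 2.0], [3.0], [4.0, 5.0, 6.0]]

batch, counts = gather(ranks)            # one collection step
results = scatter(toy_net(batch), counts)  # one inference call, then return
```

The design choice is to pay for one larger transfer and one inference call instead of many small ones; whether that wins in practice depends on the machine and problem size.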

Applications and Case Studies

MiMA - proof of concept

- The origins of FTorch
  - Emulation of existing parameterisation
  - Coupled to an atmospheric model using forpy in Espinosa et al. (2022)
  - Prohibitively slow and hard to implement
  - Asked for a faster, user-friendly implementation that can be used in future studies.
- Follow up paper using FTorch: Uncertainty Quantification of a Machine Learning Subgrid-Scale Parameterization for Atmospheric Gravity Waves (Mansfield and Sheshadri 2024)
  - “Identical” offline networks have very different behaviours when deployed online.

ICON

- Icosahedral Nonhydrostatic Weather and Climate Model
  - Developed by DKRZ (Deutsches Klimarechenzentrum)
  - Used by the DWD and MeteoSwiss
- Interpretable Multiscale Machine Learning-Based Parameterizations of Convection for ICON (Heuer et al. 2023)
  - Train U-Net convection scheme on high-res simulation
  - Deploy in ICON via FTorch coupling
  - Evaluate physical realism (causality) using SHAP values
  - Online stability improved when non-causal relations are eliminated from the net

CESM coupling

- The Community Earth System Model
  - Part of CMIP (Coupled Model Intercomparison Project)
- Make it easy for users
  - FTorch integrated into the build system (CIME)
  - libtorch is included in the software stack on Derecho
  - Improves reproducibility

Derecho by NCAR

CESM - Bias Correction

Work by Will Chapman of NCAR/M2LInES

As representations of physics, models have inherent, sometimes systematic, biases.

Run CESM for 9 years relaxing hourly to ERA5 observations (data assimilation)

Train CNN to predict anomaly increment at each level

- targeting just the MJO region

- targeting globally

Apply online as part of predictive runs

- Low hanging fruit: Don’t load model (with all its weights) at every timestep!
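The last point can be sketched in a few lines. Below, a hypothetical `BiasCorrector` loads its model once at initialisation and reuses it inside the time loop; `expensive_loader` is a stand-in for reading a saved network from disk and the increment it returns is a toy, not the CNN described above.

```python
class BiasCorrector:
    """Holds a model that is loaded once and reused at every timestep."""

    def __init__(self, loader):
        # `loader` is a hypothetical callable standing in for an expensive
        # load of the network and all its weights.
        self.model = loader()

    def step(self, state):
        # Add the predicted increment to the state at this timestep.
        return [x + dx for x, dx in zip(state, self.model(state))]


load_count = 0


def expensive_loader():
    """Stand-in for reading a saved model; counts how often it is called."""
    global load_count
    load_count += 1
    return lambda state: [-0.5 * x for x in state]  # toy increment


corrector = BiasCorrector(expensive_loader)  # load once, at initialisation
state = [1.0, 2.0, 4.0]
for _ in range(3):                           # time loop: no reloading
    state = corrector.step(state)
```

Had the loader been called inside `step`, `load_count` would grow with the number of timesteps, which is the overhead the slide warns against.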

ML Component Design/Packaging

ML parameterisations in CAM

Convection

- With Paul O’Gorman (MIT) and Judith Berner (NCAR)
  - M2LInES VESRI Project
- Precipitation is tricky to get right in GCMs
  - “too little too often”
- Train in high-res. convection-resolving model

Gravity Waves

- With Pedram Hassanzadeh and Qiang Sun (Chicago) and Joan Alexander (NWRA)
  - DataWave VESRI Project
- Gravity waves are challenging to represent accurately in GCMs
- Train in high-res. WRF model
  - (data-driven in future?)

Gravity waves by NASA/JPL - Public Domain

Deep convection by Hussein Kefel - Creative Commons

The challenges

Packaging:

When ML components are developed they typically have a:

- normalisation routine
- neural network architecture
- de-normalisation routine
- enforcement of physical constraints
  - e.g. conservation laws

Several constituents to be transferred to host model.
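A minimal sketch of how these constituents chain together is given below. All names and statistics are hypothetical, the "net" is a toy callable rather than a trained model, and the constraint (removing the mean so the column sum of the tendency is zero) is only a stand-in for a real conservation law.

```python
def normalise(values, mean, std):
    """Scale raw inputs using statistics fixed at training time."""
    return [(v - mean) / std for v in values]


def denormalise(values, mean, std):
    """Map network outputs back to physical units."""
    return [v * std + mean for v in values]


def conserve(tendency):
    """Toy constraint: subtract the mean so the column sum is zero."""
    mean = sum(tendency) / len(tendency)
    return [t - mean for t in tendency]


def run_parameterisation(inputs, net, in_stats, out_stats):
    """Normalise, apply the net, de-normalise, then enforce the constraint."""
    scaled = normalise(inputs, *in_stats)
    raw = denormalise(net(scaled), *out_stats)
    return conserve(raw)


def toy_net(z):
    """Stand-in for the neural network."""
    return [2.0 * zi for zi in z]


result = run_parameterisation(
    [1.0, 2.0, 3.0], toy_net, in_stats=(2.0, 1.0), out_stats=(0.5, 0.25)
)
```

Each of the four pieces must travel with the network when it is moved to a host model; dropping or mismatching any one of them changes the result.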

Portability:

A neural net trained on a different model/dataset requires input data of the same format as the training model to function correctly:

- grid resolution
- physical variables
- data type

Convection in CAM

Neural Network model trained in SAM (Yuval and O’Gorman 2020)

- System for Atmospheric Modelling

- Convection-Resolving Model (CRM)

To be re-deployed in CAM

- Community Atmosphere Model of CESM

- Global model

- \(\Delta x \approx 100 \, m\)

- \(\Delta x \approx 50-100 \, km\)

- \(\Delta z \approx 50-100 \, m\)

- \(\Delta z \approx 10-20 \, hPa\)

- Temperature, humidity

- Energy, vapour, rain, ice

Outputs are of the form \(\partial / \partial t\) so need to apply, convert, and difference.
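One possible reading of "apply, convert, and difference" is sketched below: the net's tendencies are applied in the net's own variables, the updated state is converted to the host model's variables, and the difference from the original host state gives a host-variable tendency. The conversion used here (an energy-like quantity built from temperature and humidity with approximate constants) is an assumption for illustration only, not the actual SAM or CAM variable definition.

```python
CP = 1004.0  # J kg^-1 K^-1, approximate specific heat of dry air
LV = 2.5e6   # J kg^-1, approximate latent heat of vaporisation


def to_net_variables(temperature, humidity):
    """Hypothetical conversion: host (T, q) to net (energy, q)."""
    return CP * temperature + LV * humidity, humidity


def to_host_variables(energy, humidity):
    """Inverse of the hypothetical conversion above."""
    return (energy - LV * humidity) / CP, humidity


def host_tendencies(temperature, humidity, d_energy_dt, d_humidity_dt, dt):
    """Turn net-variable tendencies into host-variable tendencies."""
    energy, q = to_net_variables(temperature, humidity)
    # Apply: step the net variables forward by one timestep.
    energy_new = energy + dt * d_energy_dt
    q_new = q + dt * d_humidity_dt
    # Convert: express the updated state in host variables.
    t_new, q_host_new = to_host_variables(energy_new, q_new)
    # Difference: recover tendencies in host variables.
    return (t_new - temperature) / dt, (q_host_new - humidity) / dt


# Example: moistening at constant energy implies cooling in this toy setup.
dT_dt, dq_dt = host_tendencies(280.0, 0.01, 0.0, 1.0e-8, 600.0)
```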

Software architecture for ML parameterisations

The parameterisation:

- Pure neural net core
  - TorchScript net coupled using FTorch
  - Easily swapped out as new nets trained or architecture changed
- Physics layer
  - Handles physical constraints and non-NN aspects of parameterisation, e.g. conservation laws.
- Provided with a clear API of expected:
  - variables, units, and grid/resolution
  - appropriate parameter ranges
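As an illustration of what a "clear API of expected variables, units, and ranges" might look like, the sketch below declares a small input specification and checks data against it. The variable names, units, and ranges are hypothetical examples, not those of any published parameterisation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariableSpec:
    """One expected input: its name, units, and valid range."""
    name: str
    units: str
    valid_range: tuple


# Hypothetical specification shipped alongside a parameterisation.
INPUT_SPEC = (
    VariableSpec("temperature", "K", (150.0, 350.0)),
    VariableSpec("specific_humidity", "kg kg-1", (0.0, 0.05)),
)


def check_inputs(inputs, spec=INPUT_SPEC):
    """Return a list of problems; an empty list means the inputs conform."""
    problems = []
    for var in spec:
        if var.name not in inputs:
            problems.append(f"missing variable: {var.name}")
            continue
        units, values = inputs[var.name]
        if units != var.units:
            problems.append(f"{var.name}: expected units {var.units}, got {units}")
            continue  # a range check is meaningless in the wrong units
        low, high = var.valid_range
        if any(not low <= v <= high for v in values):
            problems.append(f"{var.name}: value outside [{low}, {high}]")
    return problems


good = {
    "temperature": ("K", [250.0, 290.0]),
    "specific_humidity": ("kg kg-1", [0.001, 0.01]),
}
bad = {"temperature": ("degC", [20.0])}
```

Calling `check_inputs(good)` returns an empty list, while `check_inputs(bad)` reports the unit mismatch and the missing variable.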

Software architecture for ML parameterisations

The coupling:

- Host model
- Interface layer
  - Passes data from/to host model
  - Handles physical variable transformations and regridding
  - Passes data to/from parameterisation
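A rough sketch of such an interface layer follows, assuming a one-dimensional vertical column, piecewise-linear interpolation as the regridding method, and a Celsius-to-Kelvin change as the variable transformation. All of these choices, and the function names, are illustrative assumptions rather than the approach used in any particular model.

```python
from bisect import bisect_right


def interp(x_new, x, y):
    """Piecewise-linear interpolation of y(x) onto x_new.

    x must be strictly increasing; points outside its range take the
    nearest end value.
    """
    out = []
    for xn in x_new:
        if xn <= x[0]:
            out.append(y[0])
        elif xn >= x[-1]:
            out.append(y[-1])
        else:
            k = bisect_right(x, xn) - 1
            w = (xn - x[k]) / (x[k + 1] - x[k])
            out.append((1.0 - w) * y[k] + w * y[k + 1])
    return out


def interface(host_levels, host_temperature_c, net_levels, parameterisation):
    """Pass a host column through a parameterisation defined on another grid."""
    # Variable transformation: host uses degrees Celsius, net expects kelvin.
    temperature_k = [t + 273.15 for t in host_temperature_c]
    # Regrid host data onto the levels the parameterisation was trained on.
    net_input = interp(net_levels, host_levels, temperature_k)
    net_output = parameterisation(net_input)
    # Regrid the result back onto the host model's levels.
    return interp(host_levels, net_levels, net_output)


def toy_parameterisation(temperature_k):
    """Stand-in parameterisation: returns temperature in degrees Celsius."""
    return [t - 273.15 for t in temperature_k]


host_levels = [0.0, 1000.0, 2000.0]
net_levels = [0.0, 500.0, 1000.0, 1500.0, 2000.0]
host_result = interface(host_levels, [20.0, 10.0, 0.0], net_levels,
                        toy_parameterisation)
```

Keeping these steps in the interface layer means the parameterisation itself never needs to know about the host model's grid or units.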

Summary

- Use of ML within traditional numerical models
  - A growing area that presents challenges
- Language interoperation
  - FTorch provides a solution for scientists looking to implement Torch models in Fortran
  - Designed with both computational and developer efficiency in mind
  - Has helped deliver science in climate research and beyond (Heuer et al. (2023), Mansfield and Sheshadri (2024))
  - Built into CESM to allow the userbase access
- Coupling design
  - Careful thought can help when coupling ML and numerics
  - Adopt nested design with pure-ML core

Thanks

ICCS Research Software Engineers:

- Chris Edsall - Director

- Marion Weinzierl - Senior

- Jack Atkinson - Senior

- Matt Archer - Senior

- Tom Meltzer - Senior

- Surbhi Goel

- Tianzhang Cai

- Joe Wallwork

- Amy Pike

- James Emberton

- Dominic Orchard - Director/Computer Science

Previous Members:

- Paul Richmond - Sheffield

- Jim Denholm - AstraZeneca

FTorch:

- Jack Atkinson

- Simon Clifford - Cambridge RSE

- Athena Elafrou - Cambridge RSE, now NVIDIA

- Elliott Kasoar - STFC

- Joe Wallwork

- Tom Meltzer

MiMA

- Minah Yang - NYU, DataWave

- Dave Connelly - NYU, DataWave

CESM

- Will Chapman - NCAR/M2LInES

- Jim Edwards - NCAR

- Paul O’Gorman - MIT, M2LInES

- Judith Berner - NCAR, M2LInES

- Qiang Sun - U Chicago, DataWave

- Pedram Hassanzadeh - U Chicago, DataWave

- Joan Alexander - NWRA, DataWave

Thanks for Listening

Get in touch:

The ICCS received support from

References

Strong Scaling

Wilkes3 (CSD3)

- 3rd Generation AMD EPYC 64-Core CPUs

- NVIDIA A100-SXM-80GB GPUs

Observations:

- Data transfer to GPU becomes important
  - Suggest using MPI_Gather to reduce overheads
- CPU Net scales well

Benchmarking

Following the comparisons and MiMA experiments we performed detailed benchmarking to examine the library performance.