Reducing the Overhead of Coupled Machine Learning Models between Python and Fortran

RMetS Early Career Conference, Reading

NVIDIA

NYU

2024-06-09

Machine Learning in geophysical sciences

Machine learning is everywhere

Some applications in geosciences:

- Emulation of existing parameterisations

(Espinosa et al. 2022)

- Data-driven parameterisations

(Yuval and O’Gorman 2020; Giglio, Lyubchich, and Mazloff 2018)

- Downscaling/Upsampling

(Harris et al. 2022)

- Time series forecasting

(Shao et al. 2021)

- Equation discovery

(Zanna and Bolton 2020; Ma et al. 2021)

- Complete forecasting

(Rasp et al. 2020; Pathak et al. 2022; Bi et al. 2022)

Challenges

Replacing physics-based components of larger models (emulation or data-driven).

- Language interoperation

- Physical compatibility

Mathematical Bridge by cmglee used under CC BY-SA 3.0

Solutions

Possible solutions

Ideally, a solution should:

- Not generate excess additional work for the user

- Not require excess knowledge of computing

- Have a minimal learning curve

- Not add excess dependencies

- Be easy to maintain

- Maximise performance

Possible solutions

- Implement a NN in Fortran
  - e.g. inference-engine, neural-fortran, own solution etc.
  - how do you ensure you port it correctly?
  - ML libraries are highly optimised, probably more so than your code.
- Forpy/CFFI
  - easy to add: include the forpy.mod file and compile
  - need to manage and link Python environment
  - increases dependencies
- SmartSim/Pipes
  - pass data between workers through a network glue layer
  - steep learning curve
  - involves data copying
- Fortran-Keras Bridge
  - pure Fortran
  - inactive and incomplete

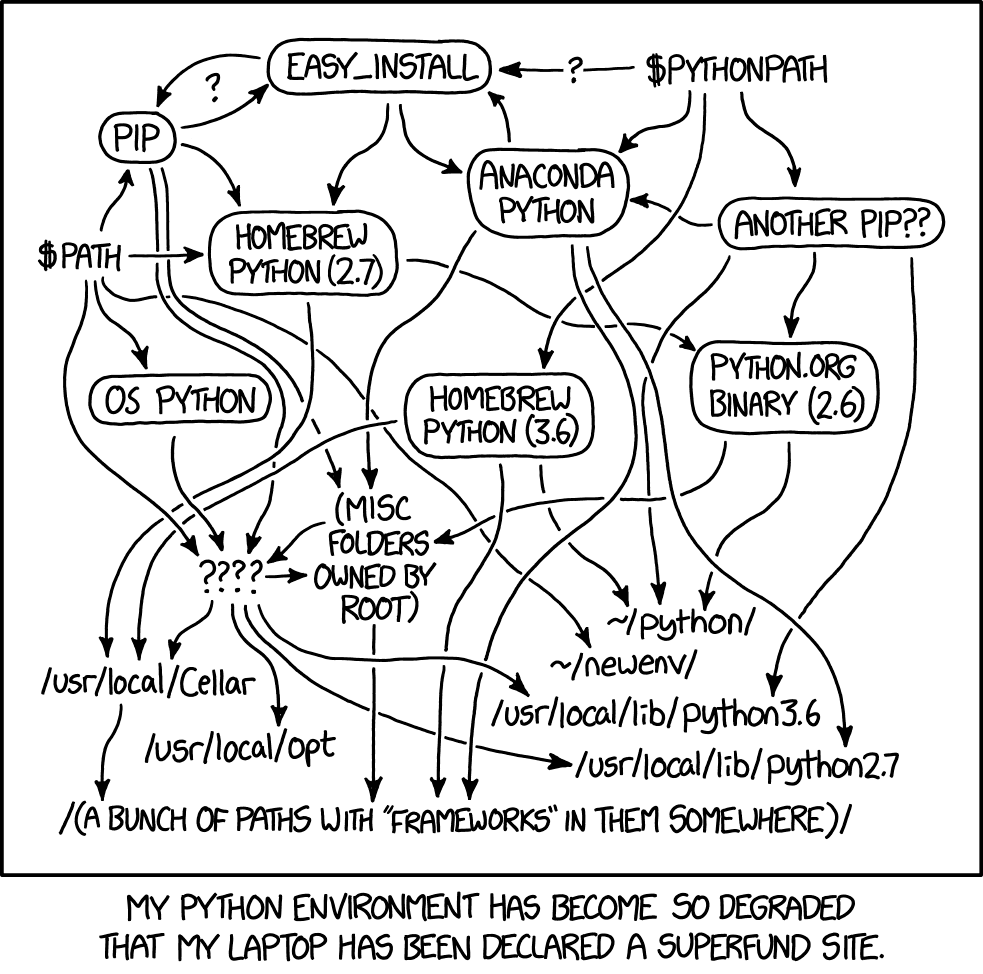

Our Solution

Python env

Python runtime

xkcd #1987 by Randall Munroe, used under CC BY-NC 2.5

Our Solution

FTorch and TF-lib

- Use Fortran’s intrinsic C-bindings to access the C/C++ APIs provided by ML frameworks
- Performance
  - avoids Python runtime
  - no-copy transfer (shared memory)
- Ease of use
  - pleasant API (see next slides)
  - utilities for generating TorchScript/TF module provided
  - examples provided
- Use frameworks’ implementations directly
  - feature support
  - future support
  - direct translation of Python models
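The "no-copy transfer" point can be illustrated with a Python-side analogy. This is only a sketch of the idea, not the libraries' Fortran code path: there, the address of the Fortran array is handed to the framework, whereas here `torch.from_numpy` plays the equivalent role for a NumPy array. The array name `sst` and its contents are hypothetical.

```python
import numpy as np
import torch

# Stand-in for an array owned by the host model (hypothetical data).
sst = np.zeros((2, 3), dtype=np.float64)

# torch.from_numpy wraps the existing buffer: no data is copied.
shared = torch.from_numpy(sst)

# torch.tensor copies the data, shown here for comparison.
copied = torch.tensor(sst)

# A write through the original array is visible in the shared tensor only.
sst[0, 0] = 1.0

# Both objects point at the same address when memory is shared.
shares_memory = shared.data_ptr() == sst.ctypes.data
```

Because the tensor is only a view, the owning array must stay alive and unmoved for as long as the tensor is in use.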

Code Example - PyTorch

- Take model file

- Save as TorchScript
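On the Python side, the two steps above might look like the following. This is a minimal sketch rather than the utility shipped with the libraries: `TinyNet` and the file name `saved_model.pt` are hypothetical stand-ins for a trained model and its output path, and `torch.jit.script` is one of PyTorch's two standard routes to TorchScript (the other being `torch.jit.trace`).

```python
import torch


class TinyNet(torch.nn.Module):
    """Hypothetical stand-in for a trained model (not from the talk)."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = torch.nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.linear(x))


def save_as_torchscript(model: torch.nn.Module, path: str) -> None:
    """Compile the model to TorchScript and write it to disk."""
    model.eval()  # inference mode: fixes dropout/batch-norm behaviour
    scripted = torch.jit.script(model)
    scripted.save(path)


save_as_torchscript(TinyNet(), "saved_model.pt")

# Reload the saved file to check it runs; torch.jit.load does not need TinyNet.
reloaded = torch.jit.load("saved_model.pt")
output = reloaded(torch.zeros(1, 4))
```

The saved file is self-describing, which is what lets it be loaded later from outside Python.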

Code Example - PyTorch

Necessary imports:

```fortran
use, intrinsic :: iso_c_binding, only: c_int, c_int64_t, c_float, c_char, &
                                       c_null_char, c_ptr, c_loc
use ftorch
```

Loading a PyTorch model:

Code Example - PyTorch

Tensor creation from Fortran arrays:

```fortran
! Fortran variables
real, dimension(:,:), target :: SST, model_output

! C/Torch variables
integer(c_int), parameter :: dims_T = 2
integer(c_int64_t) :: shape_T(dims_T)
integer(c_int), parameter :: n_inputs = 1
type(torch_tensor), dimension(n_inputs), target :: model_inputs
type(torch_tensor) :: model_output_T

shape_T = shape(SST)

! The dtype must match the kind of the Fortran reals
! (torch_kFloat64 assumes they are 64-bit)
model_inputs(1) = torch_tensor_from_blob(c_loc(SST), dims_T, shape_T, &
                                         torch_kFloat64, torch_kCPU)
model_output_T = torch_tensor_from_blob(c_loc(model_output), dims_T, shape_T, &
                                        torch_kFloat64, torch_kCPU)
```

Code Example - PyTorch

Running the model

Cleaning up:

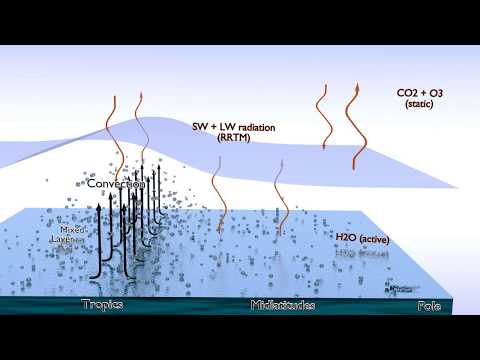

Application

Gravity Wave parameterisation in MiMA

- Model of an idealised Moist Atmosphere (Jucker and Gerber 2017)

- Aquaplanet model with moisture and mixed-layer ocean

- Successful implementation of gravity waves produces a QBO

Tests

Investigate the coupling between Fortran and the PyTorch and TensorFlow models.

- Replace AD99 GW parameterisation in MiMA

- Run MiMA for 3 years

- Time just the GW parameterisation

- Compare online simulation results to an AD99 run

- TensorFlow and PyTorch

- Old forpy and our new couplings

Results

Time-height sections of zonal-mean zonal wind (m/s) averaged between ±5° latitude

Timings (real seconds) for computing gravity wave drag.

| | Forpy | Direct | % Direct/Forpy |
|---|---|---|---|
| PyTorch | 94.43 s | 134.81 s | 142.8 % |
| TensorFlow | 667.16 s | 170.31 s | 25.5 % |
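The last column is the direct-coupling time expressed as a percentage of the Forpy time, so values below 100 % mean the direct coupling is faster. A small sketch reproducing that column from the timings (the function and variable names are illustrative only):

```python
def percent_of(direct_s: float, forpy_s: float) -> float:
    """Return the direct-coupling time as a percentage of the Forpy time."""
    return 100.0 * direct_s / forpy_s


# Timings in seconds, copied from the table above.
timings = {
    "PyTorch": {"forpy": 94.43, "direct": 134.81},
    "TensorFlow": {"forpy": 667.16, "direct": 170.31},
}

# Round to one decimal place, matching the precision used in the table.
percentages = {
    name: round(percent_of(t["direct"], t["forpy"]), 1)
    for name, t in timings.items()
}
```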

Conclusions

Take away messages

- Machine learning has many potential applications to enhance NWP and ESM

- Leveraging it effectively requires care

- We have developed libraries to allow scientists to easily and efficiently deploy ML within Fortran models

- For new projects we advise using PyTorch

Software

- The libraries presented can be found at:

- Torch: https://github.com/Cambridge-ICCS/fortran-pytorch-lib

- TensorFlow: https://github.com/Cambridge-ICCS/fortran-tf-lib

- MIT licensed

- Documentation provided

- Their implementation in the MiMA model can be found at

https://github.com/DataWaveProject/MiMA-machine-learning

Future work

- Provide a tagged first release on GitHub

- Publication through JOSS and Zenodo

- Further test GPU functionalities

- Implement functionalities beyond inference?

- Online training likely to become important

- Further testing by external users

- Help and feedback wanted!

References

Closing slide, thanks, and questions

Libraries can be found at:

Thanks to:

Get in touch:

Jack Atkinson

Slides available at: https://jackatkinson.net/slides/RMetS/RMetS.html