(ML) Parameterisations: Some Software Considerations

2024-07-08

Precursors

Slides and Materials

To access links or follow on your own device, these slides can be found at:

jackatkinson.net/slides

Licensing

Except where otherwise noted, these presentation materials are licensed under the Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) License.

Vectors and icons by SVG Repo under CC0 (1.0) or FontAwesome under SIL OFL 1.1

Parameterisations

Parameterisations (literature)

To paraphrase Easterbrook (2023), a parameterisation is:

- “a mini-model reducing a complex set of equations or processes to represent what is happening within each grid cell.”

- “Each handles a different process to be tested on its own before coupling back to the dynamic core.”

- “regularly updated or replaced as modellers improve their understanding and find better methods.”

Microphysics by Sisi Chen Public Domain

Globe grid with box by Caltech under Fair use

Parameterisations (reality)

Paper published with equations

Simple standalone test written

Plot of online deployment in a GCM

Missing key details:

- order of operations

- numerical considerations

- scheme switching

These gaps are a barrier to reproducibility.

RSE Unicorn designed by Cristin Merritt.

Parameterisations (reality)

Parameterisations are often tightly coupled to their host models¹ through:

- global variables

- custom data structures

As a result, parameterisations are often (re-)implemented for specific models, which is a barrier to:

- reusability

- scientific progress.

Some Suggestions

- Publish the standalone parameterisation code

- Include implementation details

- Isolate the scheme, coupling it to host models through interfaces¹

- Provide a clear API of expected:

  - variables, units, and grid/resolution

  - appropriate parameter ranges

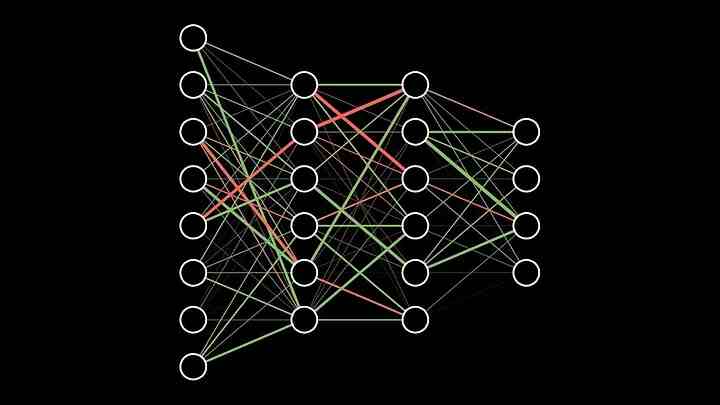
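The suggestions above can be sketched in Fortran as a standalone module with an explicit interface. The scheme (a Newtonian relaxation of temperature towards a prescribed equilibrium profile) and every name in it, including the admissible range for `tau`, are hypothetical choices for illustration only. The point is the shape of the API: every input is passed as an argument rather than through global variables, each argument documents its units, and an out-of-range parameter is reported to the caller rather than silently accepted.

```fortran
module relaxation_scheme
  implicit none
  private
  public :: relaxation_tendency

  ! Hypothetical admissible range for the relaxation timescale [s]
  real, parameter :: tau_min = 3600.0          ! 1 hour
  real, parameter :: tau_max = 86400.0 * 100   ! 100 days

contains

  ! Compute dT/dt = -(T - T_eq) / tau on each model level.
  ! All data are passed explicitly; the module holds no model state.
  subroutine relaxation_tendency(temp, temp_eq, tau, dtdt, ierr)
    real, intent(in)     :: temp(:)     ! temperature [K]
    real, intent(in)     :: temp_eq(:)  ! equilibrium temperature [K], same levels as temp
    real, intent(in)     :: tau         ! relaxation timescale [s]
    real, intent(out)    :: dtdt(:)     ! temperature tendency [K s-1]
    integer, intent(out) :: ierr        ! 0 on success, 1 if tau is out of range

    if (tau < tau_min .or. tau > tau_max) then
      ierr = 1
      dtdt = 0.0
      return
    end if

    ierr = 0
    dtdt = -(temp - temp_eq) / tau
  end subroutine relaxation_tendency

end module relaxation_scheme
```

A host model would call `relaxation_tendency` with its own arrays and check `ierr`; because nothing in the module is tied to a particular host, the same code can be tested standalone and coupled to different models.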

ML Parameterisation


Challenges:

- Portability

- Reproducibility

- Physical compatibility

- Language interoperation

ML parameterisations in CAM

Convection

- With Paul O’Gorman (MIT) and Judith Berner (NCAR), M2LInES

- Precipitation is tricky in GCMs: “too little too often”

- Train on data from a high-res. convection-resolving model

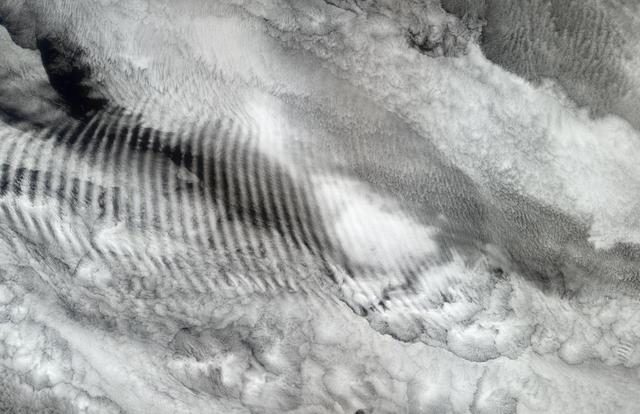

Gravity Waves

- With Pedram Hassanzadeh and Qiang Sun (Chicago) and Joan Alexander (NWRA), DataWave

- Gravity waves are challenging to represent accurately in GCMs

- Train on data from a high-res. WRF model (data-driven in future?)

Gravity waves by NASA/JPL - Public Domain

Deep convection by Hussein Kefel - Creative Commons

FTorch

A software library developed at ICCS to tackle this X-VESRI¹ challenge.

Provides an interface from Fortran to ML models saved from PyTorch (in TorchScript format).

Available from:

github.com/Cambridge-ICCS/FTorch

FTorch Highlights

use ftorch
implicit none

real, dimension(5), target :: in_data, out_data                     ! Fortran data structures
type(torch_tensor), dimension(1) :: input_tensors, output_tensors   ! Set up Torch data structures
type(torch_model) :: torch_net
integer, dimension(1) :: tensor_layout = [1]

in_data = ...  ! Prepare data in Fortran

! Create Torch input/output tensors from the Fortran arrays
call torch_tensor_from_array(input_tensors(1), in_data, tensor_layout, torch_kCPU)
call torch_tensor_from_array(output_tensors(1), out_data, tensor_layout, torch_kCPU)

call torch_model_load(torch_net, 'path/to/saved/model.pt')          ! Load ML model
call torch_model_forward(torch_net, input_tensors, output_tensors)  ! Infer

call further_code(out_data)  ! Use output data in Fortran immediately

! Cleanup
call torch_tensor_array_delete(input_tensors)
call torch_tensor_array_delete(output_tensors)
call torch_model_delete(torch_net)

FTorch Outlook

Current topics:

- Increased engagement with projects.

- Investigations into online training.

- Bringing automatic differentiation to Fortran models via torch.autograd¹.

If you are interested or have feature requests/contributions, please:

- Speak to us

- Book an ICCS Climate Code Clinic (summer school or online)

- Join the summer school session

Software architecture for ML parameterisations

The parameterisation:

- Pure neural net core¹

  - Easily swapped out as new nets are trained or the architecture changes

- Physics layer

  - Handles physical constraints and non-NN aspects of the parameterisation, e.g. conservation laws

- Provided with a clear API:

  - variables, units, resolution
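A minimal sketch of one physics-layer constraint, assuming a scheme that should only redistribute a quantity within a column, so the mass-weighted column integral of its tendency must vanish. The module and routine names are hypothetical, and removing the mass-weighted mean is only one of several possible ways to impose such a constraint on a net's raw output.

```fortran
module physics_layer
  implicit none
  private
  public :: enforce_column_conservation

contains

  ! Adjust a NN-predicted tendency so that its mass-weighted column
  ! integral, sum(tend * dp) / g, is zero (to rounding). The mass-weighted
  ! mean is subtracted from every level; g cancels, so it is not needed.
  subroutine enforce_column_conservation(tend, dp)
    real, intent(inout) :: tend(:)  ! tendency on model levels (any units)
    real, intent(in)    :: dp(:)    ! positive layer pressure thickness [Pa], same size as tend
    real :: mean_tend

    mean_tend = sum(tend * dp) / sum(dp)
    tend = tend - mean_tend
  end subroutine enforce_column_conservation

end module physics_layer
```

Because the constraint lives outside the net, it continues to hold when the NN core is retrained or swapped for a different architecture.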

Software architecture for ML parameterisations

The coupling:

- Interface layer

  - Passes data from/to host model/parameterisation

  - Handles physical variable transformations and regridding

  - Handles generalisation
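As a minimal sketch of the regridding part of an interface layer, the routine below linearly interpolates a column profile from the host model's pressure levels to the levels a net was trained on. The module and routine names are hypothetical, interpolating linearly in pressure is an assumption (log-pressure is a common alternative), and target levels outside the host model's range are simply clamped to the nearest end value.

```fortran
module interface_layer
  implicit none
  private
  public :: interp_to_levels

contains

  ! Linearly interpolate f_src, defined on host pressures p_src, onto the
  ! NN's pressures p_dst. p_src must be strictly increasing (index 1 at the
  ! top of the column); targets outside [p_src(1), p_src(n)] are clamped.
  subroutine interp_to_levels(p_src, f_src, p_dst, f_dst)
    real, intent(in)  :: p_src(:)  ! host model pressure levels [Pa]
    real, intent(in)  :: f_src(:)  ! field on host levels
    real, intent(in)  :: p_dst(:)  ! NN training pressure levels [Pa]
    real, intent(out) :: f_dst(:)  ! field on NN levels
    integer :: i, k, n
    real :: w

    n = size(p_src)
    do i = 1, size(p_dst)
      if (p_dst(i) <= p_src(1)) then
        f_dst(i) = f_src(1)
      else if (p_dst(i) >= p_src(n)) then
        f_dst(i) = f_src(n)
      else
        ! Find k such that p_src(k) < p_dst(i) <= p_src(k+1)
        k = 1
        do while (p_src(k + 1) < p_dst(i))
          k = k + 1
        end do
        w = (p_dst(i) - p_src(k)) / (p_src(k + 1) - p_src(k))
        f_dst(i) = (1.0 - w) * f_src(k) + w * f_src(k + 1)
      end if
    end do
  end subroutine interp_to_levels

end module interface_layer
```

Keeping this step in the interface layer lets the same net be coupled to host models with different vertical grids without modifying the NN core.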

Net Architecture

We should maintain a principle of separation between the physical model and the net.

Concatenation

- part of the NN, not part of the physics

Normalisation

- part of the NN, not part of the physics

The alternative is rewriting code to perform these steps in the physical model, which:

- takes time

- reduces reproducibility

- adds complexity.
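One way to write this down, assuming simple z-score normalisation (an assumption; no particular scheme is specified here) and two hypothetical input fields $x_1$ and $x_2$, is as a single mapping that the net owns end to end:

$$
\hat{y} = \mu_y + \sigma_y \odot f_\theta\!\left( \frac{[\,x_1;\ x_2\,] - \mu_x}{\sigma_x} \right)
$$

Here $[\,x_1;\ x_2\,]$ is the concatenation of the inputs, $\mu_x$, $\sigma_x$, $\mu_y$, $\sigma_y$ are training-set statistics (the division and $\odot$ act elementwise), and $f_\theta$ is the trained network. If the statistics are saved with the net's weights, the host model only passes raw physical fields in and receives physical outputs back.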

Summary

“a mini-model reducing a complex set of equations or processes to represent what is happening within each grid cell.”

“Each handles a different process to be tested on its own before coupling back to the dynamic core.”

“regularly updated or replaced as modellers improve their understanding and find better methods.”


Summary

“a mini-model reducing complex behaviour representing what is happening within each grid cell.”

“architected and trained in isolation or in situ, to be (ported and) coupled back to a dynamic core.”

“regularly updated or replaced as modellers improve their understanding and find better methods.”¹

Summary

Considered software design will speed up development and improve portability, helping us explore generalisation and stability, and further the science.

Thanks

ICCS Research Software Engineers:

- Chris Edsall - Director

- Marion Weinzierl - Senior

- Jack Atkinson - Senior

- Matt Archer - Senior

- Tom Meltzer - Senior

- Surbhi Goel

- Tianzhang Cai

- Joe Wallwork

- Amy Pike

- James Emberton

- Dominic Orchard - Director/Computer Science

Previous Members:

- Paul Richmond - Sheffield

- Jim Denholm - AstraZeneca

FTorch:

- Jack Atkinson

- Simon Clifford - Cambridge RSE

- Athena Elafrou - Cambridge RSE, now NVIDIA

- Elliott Kasoar - STFC

- Joe Wallwork

- Tom Meltzer

MiMA

- Minah Yang - NYU, DataWave

- Dave Connelly - NYU, DataWave

CESM

- Will Chapman - NCAR, M2LInES

- Jim Edwards - NCAR

- Paul O’Gorman - MIT, M2LInES

- Judith Berner - NCAR, M2LInES

- Qiang Sun - U Chicago, DataWave

- Pedram Hassanzadeh - U Chicago, DataWave

- Joan Alexander - NWRA, DataWave

Thanks for Listening

Get in touch:

The ICCS received support from

These slides, and others, are available at jackatkinson.net/slides

References